Uncovering the Infrastructure of AI’s Cambrian Explosion

As we stand on the precipice of a new digital frontier, it helps to know the landscape that provides the substrate for growth. That landscape is built on entities that have carefully nurtured the field of AI, transforming it from abstract ideas into a tangible generative force. This blog post aims to illuminate that odyssey, spotlighting the key players in this technological revolution. From the might of NVIDIA’s GPUs to the burgeoning ecosystem of Hugging Face, let’s dive into the rich substrate that is the generative AI landscape.

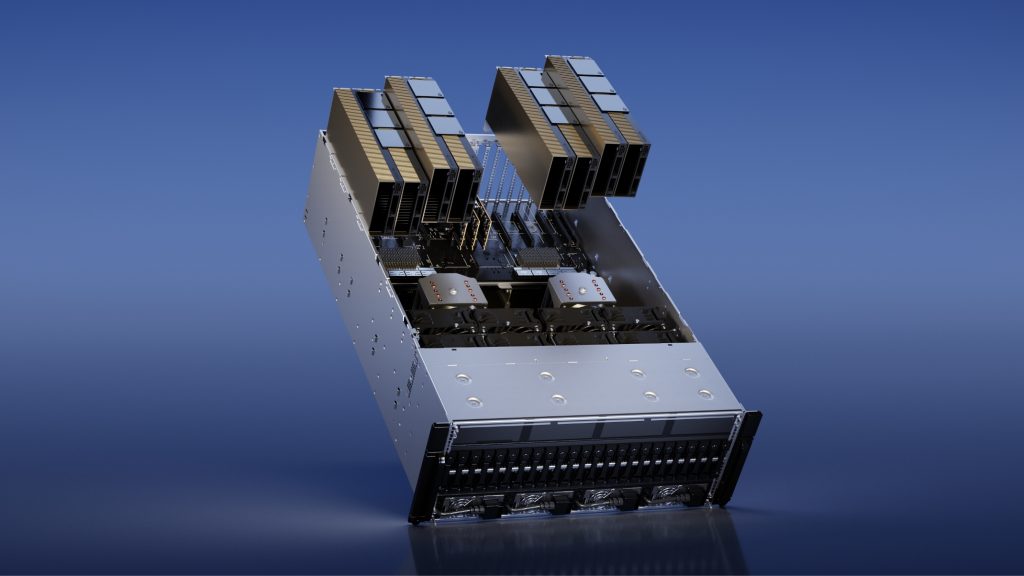

When it comes to the picks and shovels, everything depends on powerful Graphics Processing Units (GPUs). With nearly 25 years in the GPU game, NVIDIA is the undisputed king of GPUs. GPUs were originally intended for high-performance 3D graphics in games, but NVIDIA’s CUDA platform, released over 15 years ago, paved the way for AI researchers to harness that computing power for their own work.

NVIDIA’s previous flagship GPU, the A100, is still powering most of the world’s generative AI applications. GPUs power both the training of models and inference, the part that runs when a model is actually used. It is estimated that between 10k and 20k A100 GPUs were used for 4–7 months to train OpenAI’s GPT-4 model. While several FAANG companies have developed their own AI chips, NVIDIA is maintaining its leadership with the H100, the new flagship GPU, which offers several times the performance of the A100.
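To get a feel for the scale of that estimate, here is a rough back-of-the-envelope sketch. It only multiplies out the two ends of the range quoted above; the 30-day month and the assumption that every GPU ran around the clock are mine, so treat the result as an order of magnitude and nothing more.

```python
# Back-of-the-envelope GPU-hours for the GPT-4 training estimate quoted above.
# Assumptions (not from any official source): a month is 30 days,
# and every GPU runs 24 hours a day for the whole period.
HOURS_PER_MONTH = 30 * 24


def gpu_hours(num_gpus: int, months: int) -> int:
    """Total GPU-hours if num_gpus run non-stop for the given number of months."""
    return num_gpus * months * HOURS_PER_MONTH


low = gpu_hours(10_000, 4)   # lower end: 10k GPUs for 4 months
high = gpu_hours(20_000, 7)  # upper end: 20k GPUs for 7 months

print(f"{low:,} to {high:,} A100 GPU-hours")
```

Under those assumptions the estimate spans roughly 29 million to 101 million A100 GPU-hours.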

While the hardware is crucial, software plays an equally pivotal role, which leads us to what really helped propel the current AI hypergrowth. First, let’s look at the cheerful Hugging Face 🤗, a New York startup founded in 2016 that initially developed a chatbot app for bored teens.

Hugging Face started to open source the code powering its chatbot and, after releasing the Transformers library, quickly pivoted to becoming a community and platform for AI research.

Today Hugging Face is frequently called the GitHub of AI and is a true AI powerhouse with the largest repository of state-of-the-art AI models, code and libraries. Last year in May, Hugging Face took in a Series C investment of $100M at a valuation of $1B.

The Hugging Face hub is now the center of gravity for open source AI. All Stable Diffusion models from adjacent startups like Stability AI and RunwayML were originally uploaded to the hub, and as of last year it hosted over 200k models and had over 1M weekly active users.

Beyond the hub and the Transformers library, Hugging Face maintains a couple of other high-impact technologies, like Gradio and the Diffusers library. Both have been hugely important for the recent explosion of open source AI.

Gradio is a Python library that provides a clean web interface to machine learning models (for you R data nerds out there, it’s a bit like Shiny). Gradio is integrated with the Hugging Face hub but can be used stand-alone too. While providing an interface to machine learning code might seem like a quick hack, Gradio has been hugely important for demonstrating the fast-paced progress in generative AI: an interactive experience makes it much easier to grasp what the breakthrough methods published in research papers actually do.
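To make that concrete, here is a minimal sketch of what wrapping a function in Gradio can look like. The `shout` function is a hypothetical stand-in for a real model, and `build_demo` is just a helper name I made up; the only Gradio call used is `gr.Interface`, and it is imported lazily so the toy function can be tried without Gradio installed.

```python
def shout(text: str) -> str:
    """Toy stand-in for a model: upper-cases the input and adds a '!'."""
    return text.upper() + "!"


def build_demo():
    """Wrap `shout` in a simple text-in, text-out Gradio interface."""
    # Imported here so the rest of the file works without Gradio installed.
    import gradio as gr

    return gr.Interface(fn=shout, inputs="text", outputs="text")
```

Calling `build_demo().launch()` should start a local web page with a text box for the input and another for the output. Swapping `shout` for a function that calls an actual model is the whole trick.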

The Diffusers library is a toolbox for diffusion models that generate images, video, audio and more. The library is integrated with the Hugging Face hub, which makes downloading models a breeze, and with only a few lines of code developers can explore and run state-of-the-art pre-trained models. If you want to see for yourself, the latest example I’ve played with is this text-to-audio example notebook.
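As a rough illustration of the "few lines of code" claim, here is a sketch of a text-to-image run with Diffusers. The function name `generate_image` and its parameters are hypothetical, and I am assuming that `model_id` points at a text-to-image checkpoint on the hub, since other pipeline types return different outputs. Running it downloads several gigabytes of weights and is slow without a GPU.

```python
def generate_image(model_id: str, prompt: str, out_path: str = "output.png") -> str:
    """Generate one image from a text prompt and save it to out_path.

    Assumes model_id names a text-to-image diffusion checkpoint
    on the Hugging Face hub.
    """
    # Imported lazily: both packages are large and optional.
    import torch
    from diffusers import DiffusionPipeline

    # Use the GPU when one is available, otherwise fall back to the CPU.
    device = "cuda" if torch.cuda.is_available() else "cpu"

    # Download (or load from the local cache) the pipeline from the hub.
    pipe = DiffusionPipeline.from_pretrained(model_id)
    pipe = pipe.to(device)

    # Text-to-image pipelines return an object whose .images is a list of PIL images.
    image = pipe(prompt).images[0]
    image.save(out_path)
    return out_path
```

You would call it with the hub ID of whichever checkpoint you want to try, plus a prompt. The hub integration is what makes the `from_pretrained` line do all the heavy lifting.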

On that note, Google Colab, or Colaboratory, gets a notable mention too. It is a research tool that combines a notebook programming interface with GPU-powered instances to provide easy access to high-performance computing for the masses. It has been and continues to be an important enabler for AI research and tinkering, even as competitors like Kaggle are catching up.