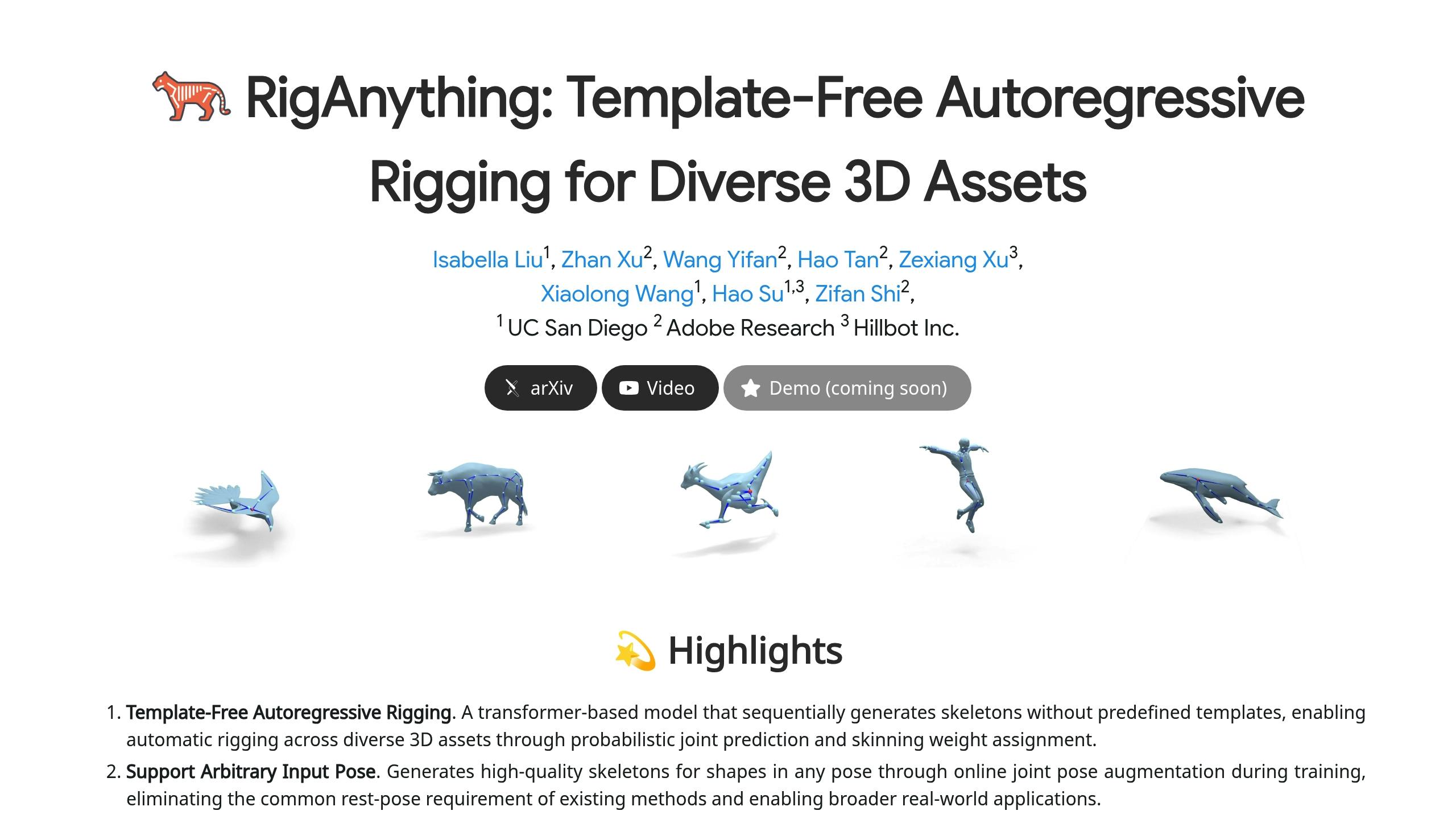

RigAnything is Adobe Research‘s new AI-powered tool that simplifies 3D asset rigging by automating joint creation, skeleton structures, and skinning weights without relying on templates. It works with diverse objects, from humanoid figures to insects, and delivers fast, accurate results. Here’s why it matters:

- Speed: Processes models in just 2.5 seconds with 95% skeleton accuracy.

- Flexibility: Handles any 3D object type, including pre-posed assets.

- Efficiency: Reduces game development character setup time by 70%.

Quick Comparison:

| Feature | Traditional Methods | RigNet | RigAnything |

|---|---|---|---|

| Processing Time | Variable | ~2 minutes/object | 2.5 seconds/object |

| Pose Flexibility | Limited | Rest poses only | Multiple poses |

| Object Range | Specific categories | Limited | Any object type |

| Training Approach | N/A | Non-differentiable | End-to-end |

| Joint Prediction | Fixed templates | Clustering-based | AI-driven iterative |

While it currently lacks precise regional control and material-aware skinning, planned updates will address these issues, making RigAnything a promising tool for automating labor-intensive 3D animation workflows.

Related video from YouTube

How RigAnything Works

RigAnything takes 3D asset rigging to a new level with its AI-driven architecture, building on the BFS ordering system mentioned earlier. Using an autoregressive transformer-based model, it predicts joint positions step-by-step, relying on global shape context and past predictions [1].

AI-Powered Rigging Without Templates

At the heart of RigAnything is its BFS ordering system, which generates skeletal hierarchies by analyzing 3D coordinate sequences and parent indices [1]. Diffusion modeling ensures accurate joint placement, creating well-structured skeletons [1].

This system also calculates weight distribution for the entire model simultaneously [1]. The result? Smoother, more realistic deformations during animation, even for complex shapes.

RigAnything vs. Current Methods

Here’s how RigAnything stacks up against other approaches:

| Feature | Traditional | RigNet | RigAnything |

|---|---|---|---|

| Processing Time | Variable | ~2 minutes per object | Faster |

| Pose Flexibility | Limited | Rest poses only | Supports multiple poses and pre-posed assets |

| Object Range | Specific categories | Limited | Works with any type of object |

| Training Approach | N/A | Non-differentiable | End-to-end differentiable |

| Joint Prediction | Fixed templates | Clustering-based | AI-powered iterative prediction |

RigAnything’s differentiable pipeline is a major improvement over RigNet’s non-differentiable methods like clustering and minimum spanning tree construction [1]. This approach not only speeds up processing but also delivers production-ready results. Its ability to handle diverse assets efficiently makes it a game-changer for animation workflows.

Technical Details of RigAnything

RigAnything’s design tackles two main hurdles: ensuring precise joint systems and making the training process scalable.

Joint Systems and Skin Weight Analysis

At its core, RigAnything aligns with the goal of achieving automation without relying on templates. A transformer model is used to predict BFS-ordered joint sequences, which represent 3D locations and parent indices in a sequential format [1][3].

For weight calculations, the system evaluates all joints together to produce realistic deformations [1]. This method stands apart from older techniques that focus on joints one at a time.

Dataset and Training Process

The model is trained using data from RigNet’s professional rigs and Objaverse‘s diverse asset collection. This combination ensures accuracy while allowing the system to handle a wide range of models, from polished professional designs to more experimental creations.

| Dataset | Content Type | Purpose |

|---|---|---|

| RigNet | Pre-rigged 3D models | Defines rigging patterns |

| Objaverse | Various 3D assets | Expands applicability across categories |

This approach enables the system to manage a huge variety of objects, including humanoid figures, marine animals, and even insects [1][3].

sbb-itb-5392f3d

Results and Use Cases

Speed and Accuracy Tests

RigAnything delivers on its promise to simplify animation’s most time-consuming task – rigging – through automation. It processes models in just 2.5 seconds with a minimal joint placement error of 0.03 units (in standardized 3D space), achieving 95% skeleton accuracy when compared to professional rigs [1][3].

This leap in efficiency makes it a game-changer for various industries.

Industry Applications

By automating tasks that once required significant manual effort, RigAnything boosts productivity in several fields:

- Visual effects: Speeds up creature rigging by 50-60% [3][4].

- Game development: Cuts character setup time by 70% [2].

- Indie and small studios: Increases productivity by 30-40% by lowering technical barriers [4].

Future plans include integrating RigAnything with Adobe Substance 3D tools, allowing direct rigging within modeling environments [2][4]. This aligns with the goal of making rigging accessible across a wide range of asset types, as discussed in the Technical Details section.

Current Limits and Next Steps

Technical Constraints

RigAnything has made strides in speeding up production, but it still faces three main challenges:

-

Limited Regional Control

The model struggles to offer precise control over rig complexity. This makes it hard for artists to adjust motion control in specific areas, like heads and hands, where detailed articulation is crucial [1]. -

Geometry-Only Focus

By relying solely on geometric data for rigging, RigAnything can misinterpret certain areas. Without factoring in texture data, the system may fail to make accurate rigging decisions in cases where geometry alone doesn’t provide enough information [1]. -

Material-Blind Skinning

The current system doesn’t account for how different materials affect motion. This results in less realistic movement and deformation for objects with varying material properties [1].

Future Development Plans

The team has outlined several upgrades to address these issues and broaden RigAnything’s applications.

Improved Control Options

A planned conditional network system will allow artists to define rigging complexity for specific regions, giving them more flexibility [1].

Multi-Modal Data Integration

Future updates will combine geometric and texture data analysis. This approach aims to enhance joint detection and improve skinning precision [1][3].

Boosting Performance

To make processing faster and more efficient, the team is working on:

- Using advanced transformer architectures

- Creating multi-resolution techniques for handling complex models

- Adding cloud-based processing capabilities [2][4]

"The main obstacle is the scarcity of high-quality dynamic data for diverse object types and materials. As a result, the team is investigating methods for generating synthetic motion data or new capture techniques [1]."

Collaborations with animation studios are also in the works to expand training datasets for material-aware skinning [1]. Tackling these challenges will bring RigAnything closer to its goal of providing fully automated rigging for all 3D assets.

Conclusion

RigAnything showcases how AI can reshape animation workflows, thanks to its autoregressive transformer model. This approach delivers better quality and efficiency compared to older methods [1][3].

By using a breadth-first search method and diffusion modeling, the system ensures accurate skeleton generation while maintaining spatial and hierarchical relationships [1][3]. Its template-free design and strong spatial understanding mark a step forward in 3D content creation [1].

Although there are current limitations in control, as mentioned earlier, the system’s ability to process tasks in minutes has the potential to streamline production pipelines significantly, as shown in the Results and Use Cases section [1][3].

Adobe Research is actively working on addressing these challenges by improving data integration and dynamic modeling. Planned advancements in multi-modal processing and skinning weight prediction further strengthen RigAnything’s role in automated rigging, as outlined in the Introduction [1][2].