Let’s continue our exploration of the underreported yet fascinating world of generative AI, focusing today on Neural Radiance Fields (NeRFs). NeRFs are a significant advancement in generative AI, capable of creating 3D scenes from 2D images, offering huge potential in streamlining laborious 3D modelling processes. Since their introduction in 2020, over 500 papers have been published to improve upon this technology, with notable implementations including HumanRF, Zip-NeRF, and commercial apps like Luma AI.

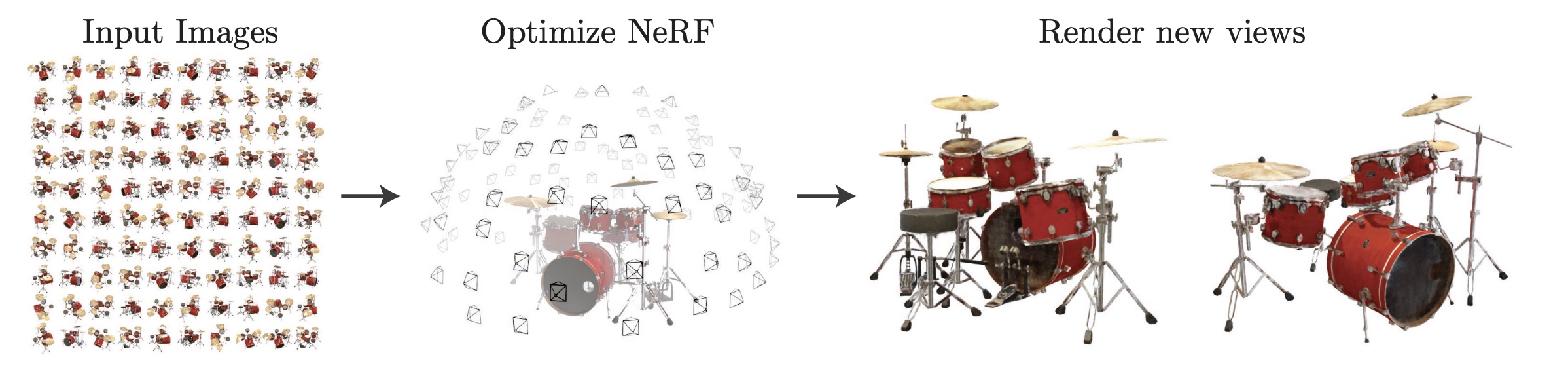

So what’s a NeRF then? It’s a neural network trained to represent a 3D scene from one or more 2D images. The method was introduced in this 2020 paper from researchers at the University of California and Google Research. In very simple terms, the network is given a 3D coordinate and a viewing direction, and it outputs a color and a density (roughly, how opaque the scene is at that point). These outputs are combined along camera rays into pixels, which are compared to the actual images during training until the network accurately recreates the scene. Once the NeRF is trained, any view can be rendered from it, including views that do not appear in the input images. This is called novel view synthesis, and it is the reason NeRFs are considered generative AI models.
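To make the rendering step a little more concrete, here is a minimal NumPy sketch of how per-point colors and an opacity-like value (called density in the original paper) can be blended along a single camera ray into one pixel. It is an illustration under stated assumptions, not the paper's implementation: `toy_field` is a hypothetical stand-in for a trained network (a solid red sphere), and the function names, sample count, and near/far bounds are made up for the example.

```python
import numpy as np

def toy_field(points, view_dir):
    """Hypothetical stand-in for a trained NeRF network (an assumption).

    points: (N, 3) sample positions, view_dir: (3,) unit viewing direction.
    Returns an RGB color per point (N, 3) and a non-negative density (N,).
    The "scene" is a solid red sphere of radius 0.5 centered at the origin;
    view_dir is accepted but ignored, so the color is the same from any angle.
    """
    inside = np.linalg.norm(points, axis=1) < 0.5
    density = np.where(inside, 50.0, 0.0)
    color = np.tile(np.array([1.0, 0.0, 0.0]), (len(points), 1))
    return color, density

def render_ray(field, origin, direction, near=0.0, far=4.0, n_samples=128):
    """Blend the field's outputs along one ray into a single pixel color."""
    direction = direction / np.linalg.norm(direction)
    t = np.linspace(near, far, n_samples)           # distances along the ray
    points = origin + t[:, None] * direction        # (n_samples, 3)
    color, density = field(points, direction)

    delta = np.full(n_samples, (far - near) / (n_samples - 1))  # step size
    alpha = 1.0 - np.exp(-density * delta)          # opacity of each segment
    # Fraction of light that reaches each sample without being blocked earlier.
    transmittance = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    weights = transmittance * alpha
    rgb = (weights[:, None] * color).sum(axis=0)
    return rgb, weights.sum()                       # pixel color, total opacity

# One ray that hits the sphere and one that misses it.
camera = np.array([0.0, 0.0, -2.0])
hit_rgb, hit_opacity = render_ray(toy_field, camera, np.array([0.0, 0.0, 1.0]))
miss_rgb, miss_opacity = render_ray(toy_field, camera, np.array([0.0, 1.0, 0.0]))
```

During training, a pixel rendered this way would be compared with the matching pixel in a real photo (for example with a squared error), and the network's weights adjusted to shrink the difference. Rendering a novel view is the same procedure, with rays cast from a camera position that was never photographed.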

Why do NeRFs matter? For starters, 3D modelling is labor-intensive and arduous. NeRFs, even in their early stages, show great promise in simplifying these processes and reducing reliance on advanced 3D software and sensor equipment. 3D capture is also big business: Matterport, for example, has a market cap of over 800 million dollars.

Since the initial paper from 2020, over 500 more have been posted on arXiv, building and improving on the initial architecture and method.

Keen on trying out NeRF yourself? Here are a couple of links to get you going with Instant Neural Graphics Primitives (instant-ngp), which trains NeRFs quickly. Several mobile apps make it easy to capture the spatial data needed to train a NeRF. NeRF Capture is one example on the iOS App Store that streams data directly to instant-ngp.

The instant-ngp repository lists two other video-based techniques to generate NeRFs, namely COLMAP and Record3D. Another popular NeRF implementation is F2-NeRF, which can handle arbitrary camera trajectories as input.

To round off, let’s examine a few examples that expand upon and build on the original research.

- HumanRF is an interesting implementation that uses NeRFs for high-fidelity motion capture and rendering

- Zip-NeRF improves rendering quality by introducing anti-aliasing, which reduces jaggies and missing scene content

- In the commercial space, Luma AI has gained some traction since launching a little over a year and a half ago

- While most techniques so far have relied on videos or multiple images to train the NeRFs, there are also methods that work from a single image, such as the recent NerfDiff

To conclude, while NeRFs are bleeding edge today, they are very promising and could contribute greatly to converting our physical spaces into digital ones, in ways that are difficult to foresee.