OMG, what a time for Large Language Models (LLMs)!

Before getting to what must be considered the biggest news, here is one announcement that might have slipped under the radar: Google is rolling out an API for PaLM, a huge 540B-parameter model intended to generalize across domains and tasks. The API is still in closed beta, as Google is very cautious in releasing this sort of technology, in contrast to the guns-blazing approach of OpenAI. 🤠 Even if slow, more competition in this space will surely benefit the wider community.

With that out of the way, OpenAI started to roll out GPT-4 (don’t pronounce that with a faux-French accent 💨) in a big way. This next model is a great leap forward from earlier multimodal LLMs as well as earlier GPT models. Compared to GPT-3.5, this version is significantly more capable across multiple domains. GPT-4 is available as a text-only model to ChatGPT Plus subscribers, and the API has a waitlist.

Why is this huge news? Well, the multimodal capability can unlock new transformative workflows. See, for example, the new model take a napkin sketch as input and generate a fully working HTML implementation.

Its coding capabilities have grown so much that it can now code simple web games; see below.

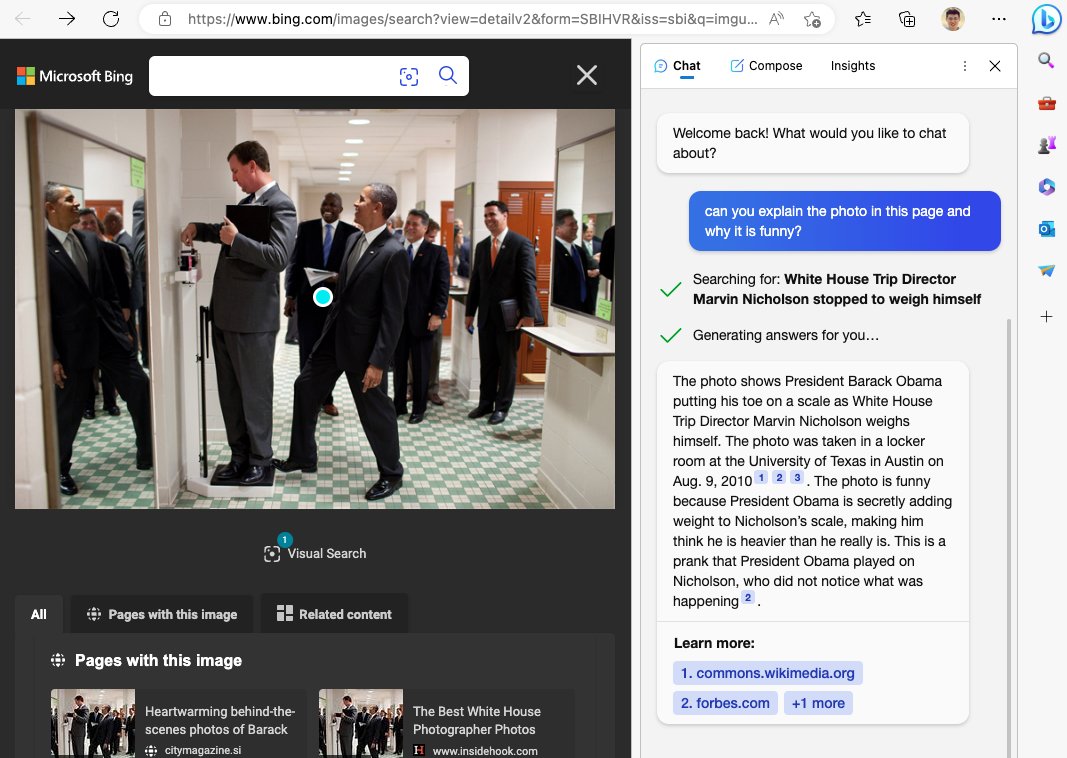

Finally, after the ten-year-old Karpathy example of AI and computer vision being unable to understand the humor in a picture in which Obama tilts the scale, these new models are actually capable of inferring humor.

Perhaps naively, I certainly didn’t expect Chomsky to weigh in on LLMs, but hey, here we are. While I fully agree with him that human and LLM learning are fundamentally different, it does not detract from the enormous potential of this technology, and I think the good old Chomsk’ underestimates the impact it will have.

Talking about throwing weight(s) around, many online were saying that LLMs were having their Stable Diffusion moment last week. This followed the news that the weights for LLaMA, the open-source-ish model from Meta, had been leaked online. While some fret, like this writer for The Verge, the leak has already spurred great innovation, like a small version of the LLM running on a Raspberry Pi 🤯