Looking for the best open-source beat detection models? Here’s a quick guide to help you choose the right tool for your needs. Beat detection is crucial for syncing music with visuals, video editing, and live performances. This article breaks down the top models, their strengths, and use cases.

Key Models and Features:

- BeatNet: Real-time & offline processing, neural network-based, versatile for various applications.

- BEAST: Real-time tracking, uses Bayesian networks, great for live performances.

- Madmom: Offline-focused, combines RNNs with signal processing, ideal for detailed analysis.

Applications:

- Live performances: Sync music with lighting and effects.

- Video production: Automate rhythm-based edits and transitions.

- Interactive installations: Create music-responsive experiences.

Challenges in Beat Detection:

- Handling varied rhythms and BPM shifts.

- Dealing with noisy or low-quality audio.

- Balancing real-time performance with accuracy.

Quick Comparison Table:

| Feature | BeatNet | BEAST | Madmom |
|---|---|---|---|
| Processing Modes | Real-time & Offline | Real-time | Offline (primary) |
| Core Technology | Neural Networks | Bayesian Networks | RNN + Signal Processing |
| Best Use Case | Versatile Applications | Live Performance | Audio Analysis |
| Latency | <50ms | <100ms | Offline focus |

Whether you need real-time precision or offline accuracy, this guide has you covered. Keep reading to dive deeper into the tools, examples, and practical applications.

Key Concepts and Metrics for Beat Detection

Basic Concepts of Beat Detection

Beat detection revolves around three main steps: onset detection, tempo estimation, and beat tracking. Onset detection lays the groundwork by locating the moments where notes or percussive events begin. Tempo estimation then infers the dominant interval between those events, and beat tracking places individual beats at that tempo. Together, these steps pinpoint the rhythmic pulse in audio that makes people tap their feet.

Here’s a quick look at how a beat detection system processes audio:

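# Example of beat detection using madmom
from madmom.features.beats import BeatTrackingProcessor, RNNBeatProcessor

audio_file = 'song.wav'  # path to the audio file to analyze

# Create processors: the RNN produces a beat activation function at 100 frames per second,
# and the tracker picks beat positions from that activation
rnn_processor = RNNBeatProcessor()
beat_tracker = BeatTrackingProcessor(fps=100)

# Process audio and detect beats (beat times in seconds)
beats = beat_tracker(rnn_processor(audio_file))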
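To make the three steps concrete, the sketch below runs them on a synthetic onset-strength envelope instead of real audio. It is a deliberately simplified illustration, not how madmom or BeatNet work internally: the function names, the 100 frames-per-second rate, the peak threshold, and the lag range are all assumptions chosen for the example.

```python
FPS = 100  # assumed frame rate of the onset-strength envelope

def pick_onsets(envelope, threshold=0.5):
    """Step 1, onset detection: frames that are local peaks above a threshold."""
    return [
        i for i in range(1, len(envelope) - 1)
        if envelope[i] > threshold
        and envelope[i] > envelope[i - 1]
        and envelope[i] >= envelope[i + 1]
    ]

def estimate_period(envelope, min_lag=30, max_lag=100):
    """Step 2, tempo estimation: the lag (in frames) with the strongest autocorrelation."""
    def autocorrelation(lag):
        return sum(envelope[i] * envelope[i - lag] for i in range(lag, len(envelope)))
    return max(range(min_lag, max_lag + 1), key=autocorrelation)

def track_beats(onsets, period, n_frames):
    """Step 3, beat tracking: a regular grid at the estimated period, anchored on the first onset."""
    if not onsets:
        return []
    return list(range(onsets[0], n_frames, period))

# Synthetic envelope: strong pulses every 50 frames plus weaker off-beat pulses
envelope = [0.0] * 400
for frame in range(20, 400, 50):
    envelope[frame] = 1.0
for frame in range(45, 400, 50):
    envelope[frame] = 0.3

onsets = pick_onsets(envelope)
period = estimate_period(envelope)
bpm = 60.0 * FPS / period
beat_times = [frame / FPS for frame in track_beats(onsets, period, len(envelope))]
print(f"{bpm:.0f} BPM, first beats at {beat_times[:3]}")
```

Real systems replace each stage with something far more robust (learned activations, probabilistic tracking), but the division of labor is the same.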

Challenges in Beat Detection

Essentia’s RhythmExtractor2013 algorithm illustrates the trade-offs involved: it offers a slower, more precise multifeature method and a quicker, less resource-intensive degara method. Here’s a breakdown of typical challenges:

| Challenge | Impact | Solution Approach |
|---|---|---|
| Varied Rhythms | Lower accuracy | Multi-feature analysis |
| BPM Shifts | Disrupted detection | Adaptive tracking systems |
| Audio Quality | Increased false positives | Advanced preprocessing |
| Real-time Processing | Latency issues | Optimized algorithms |

Even with these obstacles, standardized evaluation methods play a key role in comparing model performance effectively.
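As a rough illustration of what adaptive tracking means for BPM shifts, the sketch below derives a local tempo curve from beat times using a sliding median of recent inter-beat intervals. The function name and the four-interval window are assumptions for this example, not part of any of the libraries discussed here.

```python
from statistics import median

def local_bpm(beat_times, window=4):
    """Return one tempo estimate per beat interval, based on the median of the last `window` intervals."""
    intervals = [later - earlier for earlier, later in zip(beat_times, beat_times[1:])]
    estimates = []
    for i in range(len(intervals)):
        recent = intervals[max(0, i - window + 1): i + 1]
        estimates.append(60.0 / median(recent))
    return estimates

# Beats at 120 BPM (0.5 s apart) that speed up to 150 BPM (0.4 s apart)
beat_times = [0.0, 0.5, 1.0, 1.5, 2.0, 2.4, 2.8, 3.2, 3.6]
tempo_curve = local_bpm(beat_times)
print([round(bpm) for bpm in tempo_curve])
```

A short window reacts quickly to tempo changes but is more sensitive to a single mistimed beat; a longer window trades responsiveness for stability.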

Evaluation Metrics

The Music Information Retrieval Evaluation eXchange (MIREX) provides a set of metrics to evaluate beat detection systems.

"The choice of the evaluation method can have a significant impact on the relative performance of different beat tracking algorithms." – M. E. P. Davies, N. Degara, and M. D. Plumbley

This quote underscores how critical it is to select the right metrics for fair and meaningful comparisons.

Some of the most widely used metrics include:

- F-measure: Evaluates accuracy within a +/- 70ms window.

- Cemgil Score: Uses a Gaussian window with a 40ms standard deviation.

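- P-score: Cross-correlates detected and annotated beat sequences within a tolerance window tied to the median annotated beat interval.

- Information Gain: Applies Kullback-Leibler divergence to compare the histogram of beat timing errors against a uniform distribution.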

These metrics are essential for assessing how well models handle challenges like tempo shifts, genre diversity, and noisy audio. They offer a structured way to evaluate performance across different musical styles and applications.
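The first two metrics are simple enough to sketch directly. The code below is a simplified illustration using the 70 ms window and 40 ms standard deviation mentioned above; the function names are assumptions, and the greedy nearest-match step is a shortcut, so established evaluation toolkits may produce slightly different numbers in edge cases.

```python
import math

def f_measure(detected, annotated, tolerance=0.07):
    """F-measure where each annotation matches at most one detection within +/- tolerance seconds."""
    unmatched = sorted(detected)
    hits = 0
    for ref in sorted(annotated):
        candidates = [d for d in unmatched if abs(d - ref) <= tolerance]
        if candidates:
            closest = min(candidates, key=lambda d: abs(d - ref))
            unmatched.remove(closest)  # a detection can only be used once
            hits += 1
    if hits == 0:
        return 0.0
    precision = hits / len(detected)
    recall = hits / len(annotated)
    return 2 * precision * recall / (precision + recall)

def cemgil(detected, annotated, sigma=0.04):
    """Cemgil score: Gaussian-weighted timing error of the nearest detection to each annotation."""
    if not detected or not annotated:
        return 0.0
    total = 0.0
    for ref in annotated:
        error = min(abs(d - ref) for d in detected)
        total += math.exp(-(error ** 2) / (2 * sigma ** 2))
    return total / (0.5 * (len(detected) + len(annotated)))

annotated = [0.5, 1.0, 1.5, 2.0]
detected = [0.52, 1.0, 1.6, 2.03]  # the third beat is 100 ms late, outside the window
f_score = f_measure(detected, annotated)
cemgil_score = cemgil(detected, annotated)
print(f"F-measure: {f_score:.2f}, Cemgil: {cemgil_score:.2f}")
```

The F-measure treats every beat inside the window as equally correct, while the Cemgil score rewards tighter timing, which is one reason the two can rank the same systems differently.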


Leading Open Source Beat Detection Models

Let’s take a closer look at some of the top open-source beat detection models, highlighting their features and use cases.

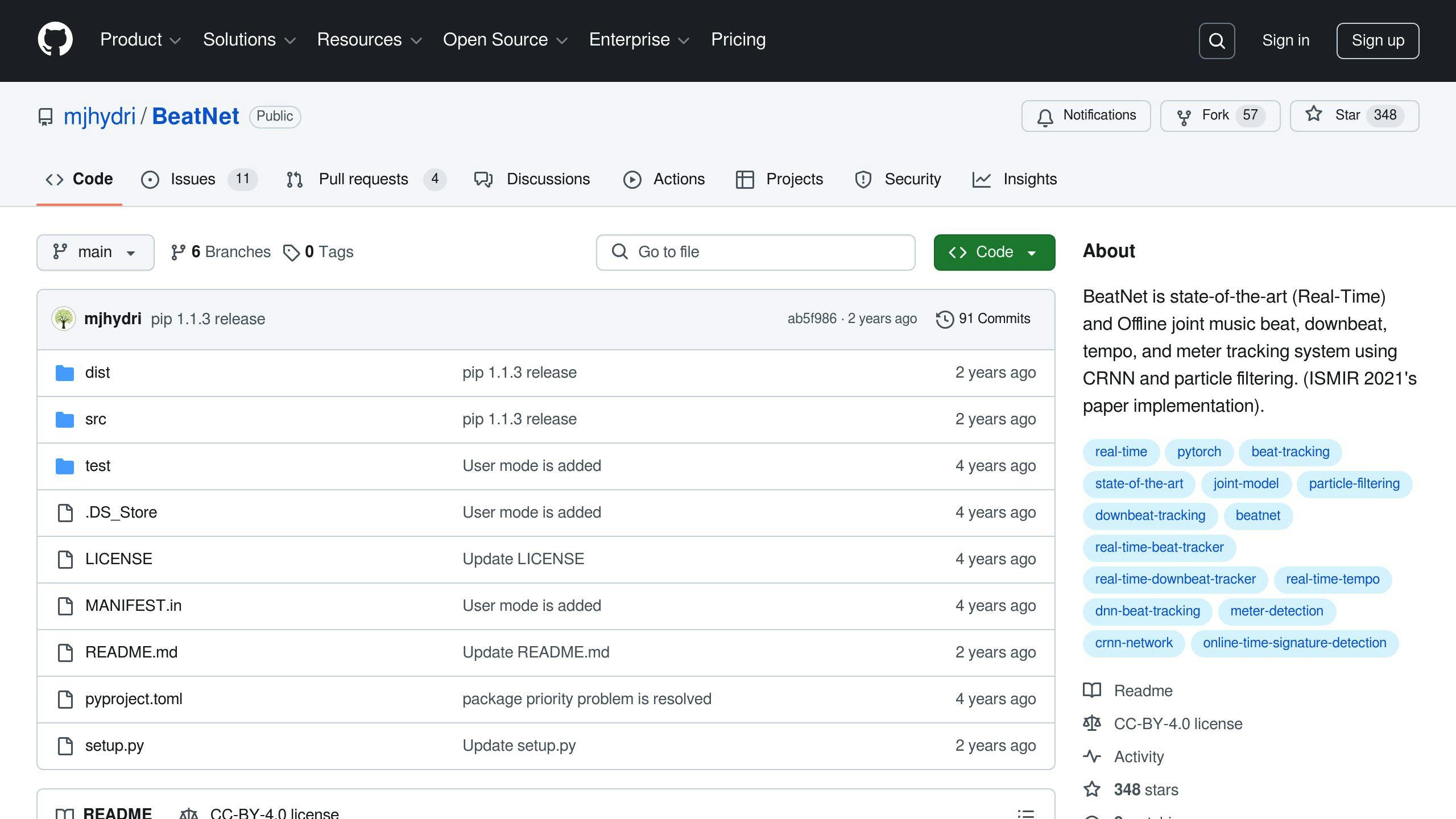

BeatNet

BeatNet is a neural network-based beat tracker that offers four modes: streaming, real-time, online, and offline [2]. It pairs a convolutional recurrent neural network (CRNN) with particle filtering, and it tracks downbeats, tempo, and meter alongside beats.

Here’s a quick example of how to use BeatNet in Python:

# Requires the BeatNet package (PyTorch-based); usage follows the project README,
# so verify it against the version you install
from BeatNet.BeatNet import BeatNet

# Offline mode with DBN inference; the first argument selects one of the bundled pre-trained models
estimator = BeatNet(1, mode='offline', inference_model='DBN', plot=[], thread=False)

# Predict beats: each output row holds a beat time in seconds and a downbeat identifier
audio_file = 'music.wav'
beats = estimator.process(audio_file)

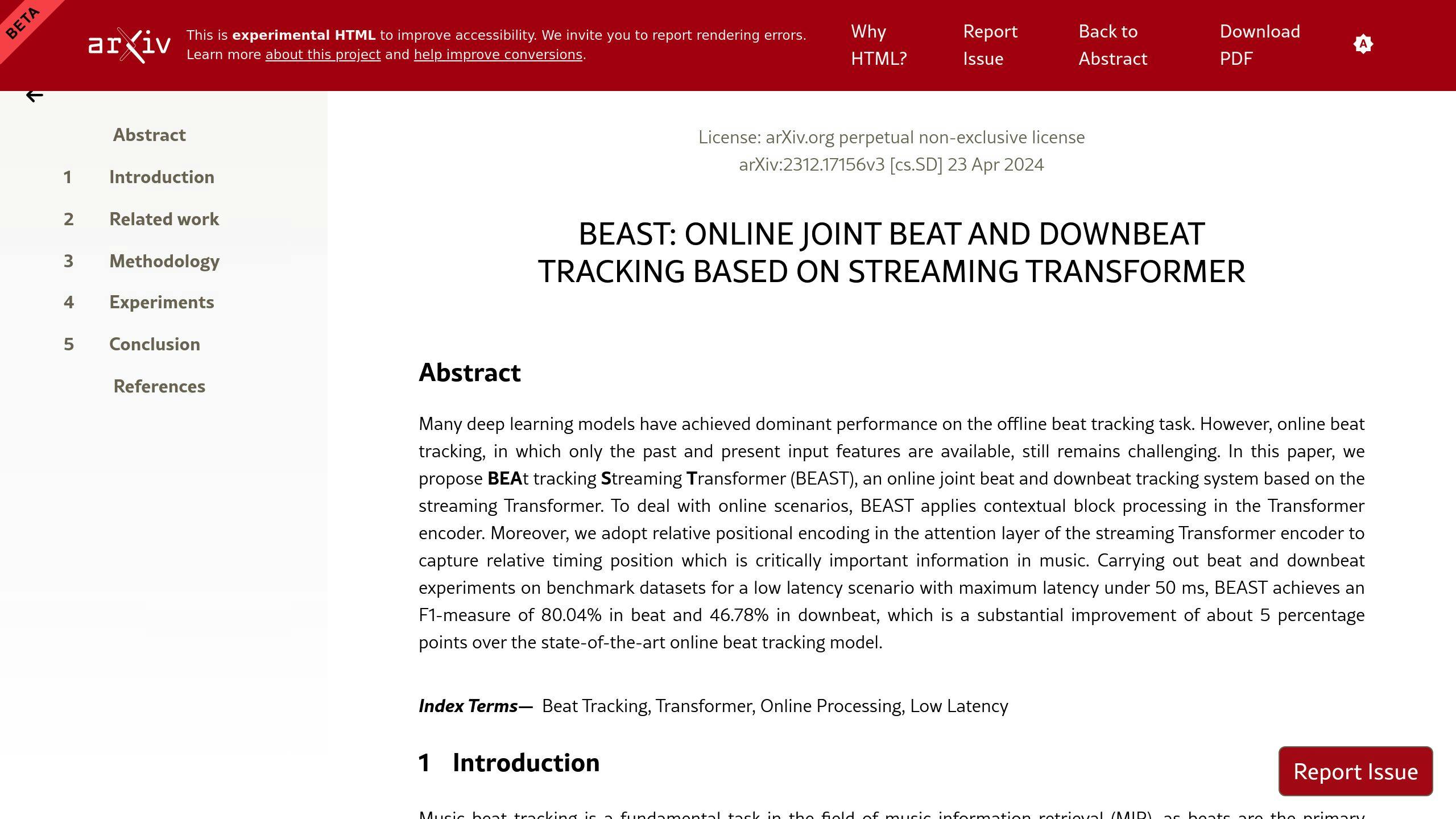

BEAST

BEAST is designed for real-time beat tracking, leveraging probabilistic models to predict beats. Its use of Bayesian networks makes it especially reliable when handling tempo changes.

Here’s how you can implement BEAST in Python:

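import pyaudio
# NOTE: `beast.BeatTracker` and its `process` method are an illustrative interface,
# not a confirmed API; check the BEAST repository for the actual entry points
from beast import BeatTracker

# Initialize BEAST tracker
tracker = BeatTracker()

# Capture one chunk of audio from an open PyAudio stream
def get_audio_input(stream, frames=1024):
    return stream.read(frames)

# Open the microphone stream once and reuse it for every chunk
p = pyaudio.PyAudio()
stream = p.open(format=pyaudio.paInt16, channels=1, rate=44100, input=True, frames_per_buffer=1024)

# Process real-time audio stream
audio_chunk = get_audio_input(stream)
beats = tracker.process(audio_chunk)

# Release the audio device when done
stream.stop_stream()
stream.close()
p.terminate()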

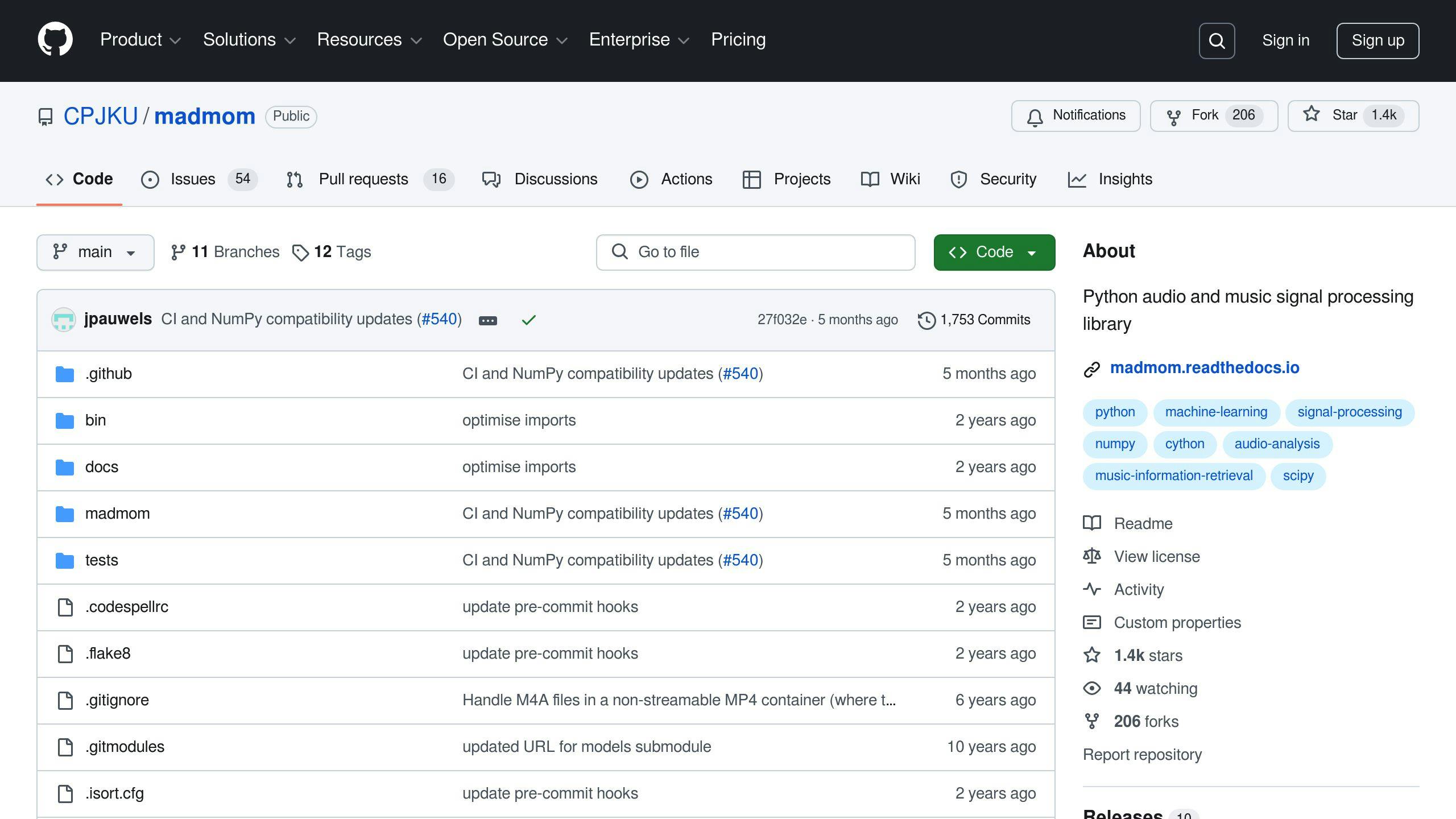

Madmom

Madmom blends machine learning with traditional signal processing techniques and has been recognized in beat tracking competitions [4][3]. It’s particularly effective for offline tasks like batch processing or detailed rhythmic analysis.

Here’s a simple implementation:

from madmom.features.beats import RNNBeatProcessor

from madmom.features.beats import BeatTrackingProcessor

# Initialize processors

rnn = RNNBeatProcessor()

tracker = BeatTrackingProcessor(fps=100)

# Process audio file

beats = tracker(rnn('song.wav'))

Feature Comparison

Here’s a quick comparison of these models to help you decide which one suits your needs:

| Feature | BeatNet | BEAST | Madmom |
|---|---|---|---|
| Processing Modes | Real-time & Offline | Real-time | Offline (primary) |
| Core Technology | Neural Networks | Bayesian Networks | RNN + Signal Processing |
| Best Use Case | Versatile Applications | Live Performance | Audio Analysis |
| Integration Complexity | Moderate | Low | Low |

Each of these tools shines in different scenarios. BeatNet is a go-to for its versatility, BEAST is ideal for live settings, and Madmom is perfect for offline tasks. Depending on your project needs, one of these models is likely to be a great fit.


Model Comparison: Performance and Usability

Accuracy Across Music Genres

Beat detection models show varying levels of accuracy depending on the musical style. Madmom’s multi-model setup utilizes separate RNNs tailored for specific genres, delivering consistent results even with complex rhythms [3].

| Genre | BeatNet | BEAST | Madmom |
|---|---|---|---|
| Classical | High | Moderate | Very High |
| Electronic | Very High | High | High |
| Jazz | Moderate | Moderate | High |
| Rock/Pop | High | High | High |
| Complex Rhythms | High | Moderate | Very High |

When choosing a model, it’s not just about accuracy – whether you need real-time or offline processing is equally important.

Real-Time vs Offline Processing

Here’s a breakdown of processing capabilities:

| Model Feature | BeatNet | BEAST | Madmom |
|---|---|---|---|
| Latency | <50ms | <100ms | Offline focus |
| CPU Usage | Moderate | Low | High |
| Memory Footprint | Medium | Low | Large |
| Batch Processing | Supported | Limited | Excellent |
| Genre Handling | Flexible | Good | Excellent |
| Real-time Performance | Excellent | Good | Limited |

BeatNet is ideal for live scenarios thanks to its low latency and efficient performance. On the other hand, Madmom is better suited for offline tasks, where its batch processing and genre-specific accuracy shine [2].
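One component of latency that is easy to reason about is the audio buffer itself: a tracker cannot react to a chunk before it has been fully captured. The sketch below works through that arithmetic for the 1024-frame, 44.1 kHz buffer used in the BEAST example above, and for a 50 ms budget like the one in the table. The function names are assumptions for illustration, and model inference time comes on top of these numbers.

```python
def buffer_latency_ms(frames_per_buffer, sample_rate):
    """Time needed to fill one audio buffer, in milliseconds."""
    return 1000.0 * frames_per_buffer / sample_rate

def largest_power_of_two_buffer(budget_ms, sample_rate):
    """Largest power-of-two buffer size (in frames) that fills within the latency budget."""
    max_frames = int(budget_ms * sample_rate / 1000)
    size = 1
    while size * 2 <= max_frames:
        size *= 2
    return size

capture_delay = buffer_latency_ms(1024, 44100)
max_buffer = largest_power_of_two_buffer(50, 44100)
print(f"1024 frames at 44.1 kHz take {capture_delay:.1f} ms to capture")
print(f"Largest power-of-two buffer under 50 ms: {max_buffer} frames")
```

Smaller buffers reduce this delay but leave less time per chunk for the model to run, which is the core of the real-time versus accuracy trade-off.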

Ease of Use and Integration

Advancements in deep learning have made beat tracking more precise and user-friendly. Each model has unique strengths when it comes to integration:

- BeatNet: Offers flexibility with multiple operating modes.

- BEAST: Stands out for its simplicity and minimal dependencies.

- Madmom: Comes with detailed documentation and strong community backing.

| Key Integration Features | BeatNet | BEAST | Madmom |
|---|---|---|---|
| Documentation Quality | Extensive | Basic | Comprehensive |
| Community Support | Active | Limited | Very Active |
| Setup Complexity | Moderate | Low | Moderate |

These features play a big role in determining how practical each model is for live performances, video production, or other workflows.

Practical Uses and Implementation

Live Performance Applications

Real-time beat detection plays a key role in syncing audio with lighting and visual effects during live shows. Here’s a Python example using BeatNet to detect beats in real time:

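# Usage follows the BeatNet project README; verify it against the version you install
from BeatNet.BeatNet import BeatNet

# Initialize BeatNet in real-time (causal) mode, which requires particle-filter inference.
# The README also documents mode='stream', which reads from the microphone instead of a file.
estimator = BeatNet(1, mode='realtime', inference_model='PF', plot=[], thread=False)

def trigger_effect(beat_time, beat_id):
    # Placeholder: trigger lighting or visual effects based on detected beats
    print(f"beat at {beat_time:.2f}s (identifier {beat_id})")

# Each output row holds a beat time in seconds and a downbeat identifier
for beat_time, beat_id in estimator.process('music.wav'):
    trigger_effect(beat_time, beat_id)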
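Because lighting and visual systems add their own output delay, it can help to schedule an effect slightly ahead of the next expected beat instead of reacting after a beat has been detected. The sketch below shows one simple way to do that, assuming beat times in seconds and a known, fixed output latency; the function names and the four-interval history are illustrative choices.

```python
from statistics import median

def predict_next_beat(beat_times, history=4):
    """Extrapolate the next beat from the median of the most recent inter-beat intervals."""
    if len(beat_times) < 2:
        return None  # not enough beats to estimate an interval
    intervals = [later - earlier for earlier, later in zip(beat_times, beat_times[1:])]
    return beat_times[-1] + median(intervals[-history:])

def seconds_until_trigger(beat_times, now, output_latency=0.0):
    """How long to wait before firing so the effect lands on the predicted beat."""
    next_beat = predict_next_beat(beat_times)
    if next_beat is None:
        return None
    return max(0.0, next_beat - output_latency - now)

recent_beats = [10.0, 10.5, 11.0, 11.5]
next_beat = predict_next_beat(recent_beats)
wait = seconds_until_trigger(recent_beats, now=11.75, output_latency=0.05)
print(f"next beat at {next_beat:.2f}s, fire in {wait:.2f}s")
```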

For interactive art installations, Madmom is a strong choice due to its accuracy in controlled environments where consistent performance and processing power are available.

While live performances need real-time precision, video production can rely on tools designed for offline editing and synchronization.

Video Production Applications

BEAST’s lightweight design is ideal for automating video cuts and syncing with audio. Here’s an example of how video producers can use BEAST:

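# NOTE: `beast.BeatTracker` and `process_file` are an illustrative interface, not a confirmed API
from beast import BeatTracker
import moviepy.editor as mp  # moviepy 1.x import path

def sync_video_to_beats(audio_file, video_file):
    tracker = BeatTracker()
    beats = tracker.process_file(audio_file)  # assumed to return beat times in seconds

    # Cut video at beat points: one subclip between each pair of consecutive beats
    video = mp.VideoFileClip(video_file)
    cuts = [video.subclip(t1, t2) for t1, t2 in zip(beats[:-1], beats[1:])]
    return cuts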

This approach simplifies video editing by automating rhythm-based cuts, saving time and effort during post-production.
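Cutting on every single beat is often too frantic for real footage, so a common refinement is to cut every few beats and to avoid very short clips. The sketch below shows that grouping step on plain beat times, independent of any beat tracker or video library; the function name and the default values are assumptions for illustration.

```python
def beats_to_segments(beat_times, beats_per_cut=4, min_length=0.5):
    """Turn beat times (seconds) into (start, end) segments, one cut every `beats_per_cut` beats."""
    cut_points = beat_times[::beats_per_cut]
    if len(cut_points) < 2:
        return []
    segments = []
    start = cut_points[0]
    for end in cut_points[1:]:
        # Merge segments shorter than min_length into the following one
        if end - start >= min_length:
            segments.append((start, end))
            start = end
    return segments

beats = [i * 0.5 for i in range(17)]  # 120 BPM, beats from 0.0 s to 8.0 s
segments = beats_to_segments(beats)
print(segments)
```

Each `(start, end)` pair can then be passed to whatever clip-extraction call your video library provides.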

"The emergence of deep learning in MIR overturned the formulation of the beat tracking task, enabling direct beat detection from audio data" [1][2]

Integration with Other Tools

Beat detection models can also work alongside other tools to expand their functionality. Here’s a quick comparison of integration methods:

| Integration Method | Use Case and Benefit |
|---|---|
| Python Scripts | Offers flexibility for custom workflows |
| VST Plugins | Seamless operation within DAW environments |
| REST APIs | Enables platform-independent web applications |

For example, combining BeatNet with other audio processing tools can enhance functionality:

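# Example of combining BeatNet with audio processing
import librosa
from BeatNet.BeatNet import BeatNet

def process_mixed_audio(audio_path):
    # Load audio with librosa
    y, sr = librosa.load(audio_path)

    # Process with BeatNet (offline mode, usage per the project README)
    estimator = BeatNet(1, mode='offline', inference_model='DBN', plot=[], thread=False)
    beats = estimator.process(audio_path)

    # Apply additional processing: onset-strength envelope for finer rhythmic detail
    onset_env = librosa.onset.onset_strength(y=y, sr=sr)
    return beats, onset_env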

When integrating beat detection into existing systems, stick to standardized formats and efficient data handling to ensure smooth performance. These methods make it easier to build workflows that serve both creative and technical needs.
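As one example of a standardized format, beat times can be exchanged as JSON, which works equally well for scripts, plugins, and web APIs. The sketch below uses a small, hypothetical schema (the field names are assumptions, not an established standard) and assumes beat times are in seconds and strictly increasing.

```python
import json

def beats_to_json(beat_times, source_name):
    """Serialize beat times (seconds) into a small JSON document using a hypothetical schema."""
    intervals = [later - earlier for earlier, later in zip(beat_times, beat_times[1:])]
    average_bpm = round(60.0 * len(intervals) / sum(intervals), 1) if intervals else None
    payload = {
        "source": source_name,
        "unit": "seconds",
        "average_bpm": average_bpm,
        "beats": [round(t, 3) for t in beat_times],
    }
    return json.dumps(payload, indent=2)

document = beats_to_json([0.5, 1.0, 1.5, 2.0], "song.wav")
restored = json.loads(document)
print(document)
```

Rounding to milliseconds keeps the payload compact while staying well inside the tolerance windows used by the evaluation metrics.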

Conclusion and Key Points

Choosing the Right Model

Selecting the best beat detection model comes down to your specific needs and technical constraints. BeatNet is a standout choice for real-time scenarios like live performances, while Madmom shines in offline tasks such as video editing. For specialized tasks, Essentia’s RhythmExtractor2013 and LoopBpmEstimator are great options, focusing on tempo estimation and short audio loops, respectively.

"BeatNet is state-of-the-art (Real-Time) and Offline joint music beat, downbeat, tempo, and meter tracking system using CRNN and particle filtering."

When deciding, think about your technical setup and processing power. For instance, RhythmExtractor2013 provides two modes: the slower, more precise multifeature mode and the quicker, less resource-intensive degara mode. This gives you flexibility to prioritize either accuracy or speed based on your needs.

Finally, keep an eye on how new advancements in beat detection could influence your future projects.

Future Trends in Beat Detection

The world of beat detection is advancing quickly, thanks to progress in deep learning and signal processing. These innovations are paving the way for systems that can better handle intricate musical patterns and challenging environments.

Some promising developments include:

- Smarter neural networks for higher precision

- Models that can process multi-track audio

- Improved real-time performance for live settings

- Efforts to lower computational demands without sacrificing accuracy

These trends should make beat detection tools more accurate, more reliable, and easier to integrate into workflows for live performances, video production, and beyond.