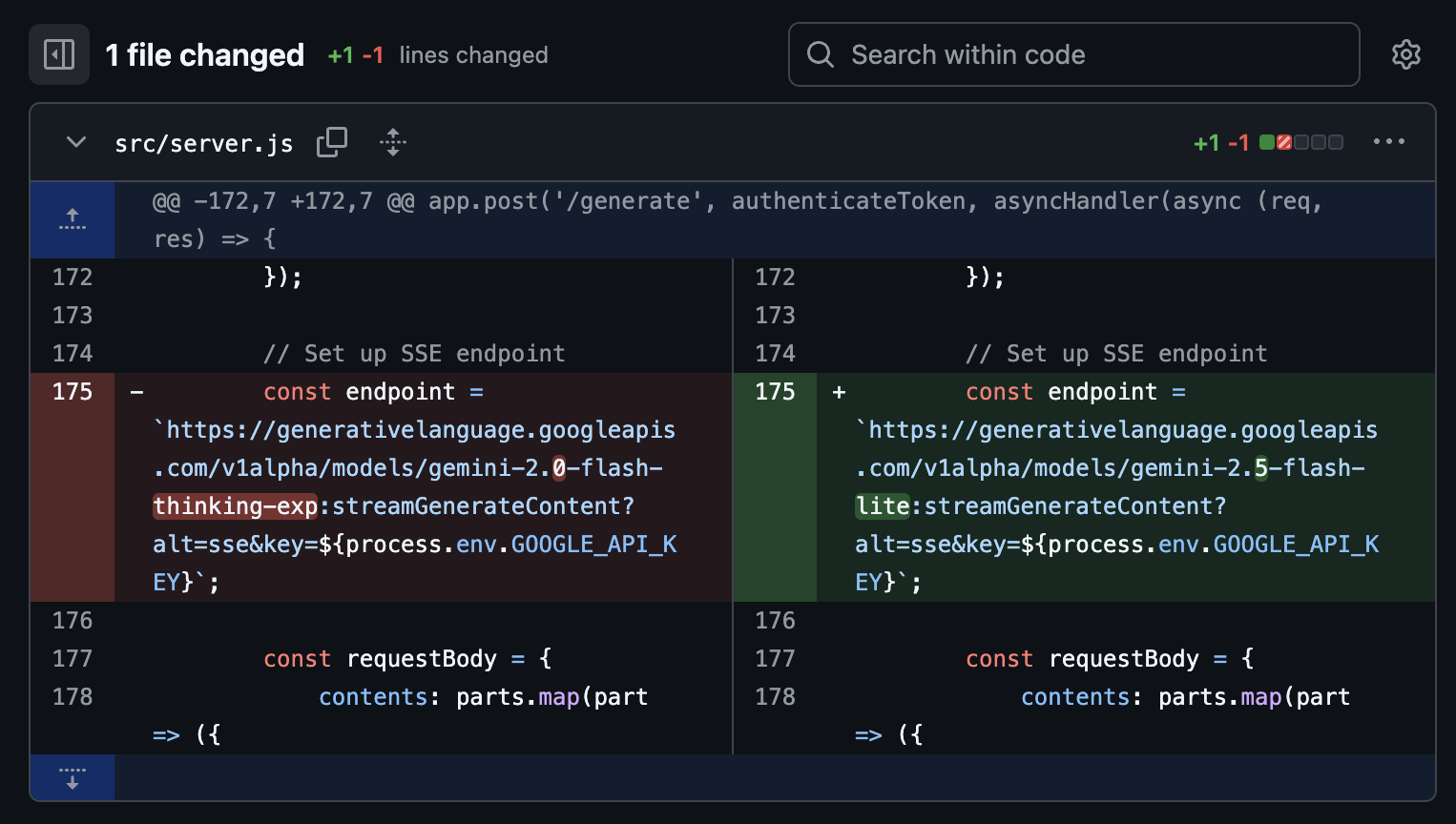

The most valuable change to Glotter Translate I’ve shipped so far wasn’t a feature. It was an upgrade patch—replacing the brain of my translation app with a single configuration change. When a stronger model arrived, I didn’t rewrite; I just switched. Overnight, “pretty cool” became “can’t live without it.”

Durable products in a fast-moving world

In fast tech cycles, durability doesn’t mean freezing the stack. It means designing for graceful replacement. With AI, that principle pays dividends: as models leap forward, the teams who planned for swappability convert hype into product-market fit.
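To make "designing for graceful replacement" concrete, here is a minimal sketch of a swappable model layer. It is an illustration under assumptions, not Glotter Translate's actual code: the names `Translator`, `BACKENDS`, `Config`, `legacy_model`, and `stronger_model` are all hypothetical, and the two backends are stubs standing in for real model calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical contract: a backend maps (text, target language) to translated text.
Translator = Callable[[str, str], str]


def legacy_model(text: str, target_lang: str) -> str:
    # Stub standing in for a call to the older model.
    return f"[legacy->{target_lang}] {text}"


def stronger_model(text: str, target_lang: str) -> str:
    # Stub standing in for a call to the newer model.
    return f"[stronger->{target_lang}] {text}"


# Registry of available backends, keyed by the name used in configuration.
BACKENDS: Dict[str, Translator] = {
    "legacy": legacy_model,
    "stronger": stronger_model,
}


@dataclass(frozen=True)
class Config:
    model: str = "legacy"  # the one value that changes on an upgrade


def translate(text: str, target_lang: str, config: Config) -> str:
    # Product code depends only on the Translator signature, not on any vendor SDK.
    try:
        backend = BACKENDS[config.model]
    except KeyError:
        raise ValueError(f"unknown model: {config.model!r}") from None
    return backend(text, target_lang)


print(translate("hello", "fr", Config(model="legacy")))
print(translate("hello", "fr", Config(model="stronger")))
```

The point of the registry is that everything above `translate` stays untouched when a new backend is added; the upgrade is a new entry plus a changed config value.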

The evaluation loop

“Newer” doesn’t always mean “better for me.” I run each candidate through a minimal eval loop:

- Quality: rubric scoring for translations.

- Latency: p50 and p95 under realistic concurrency.

- Cost: dollars per successful task (not per 1K tokens).

- Safety & drift: basic red-team prompts and regression tests on past failures.
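The latency and cost checks in the list above could be computed roughly like this. It is a sketch under assumptions: the `Run` record, the nearest-rank percentile method, and the sample numbers are all illustrative, and "successful" is reduced to a single boolean that a real rubric would have to supply.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Run:
    latency_ms: float  # wall-clock time for one task
    cost_usd: float    # spend for one task, whether or not it passed
    passed: bool       # hypothetical: did the output meet the quality rubric?


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def cost_per_success(runs: List[Run]) -> float:
    """Total spend divided by passing runs, so failed attempts still count as cost."""
    successes = sum(1 for r in runs if r.passed)
    if successes == 0:
        return math.inf
    return sum(r.cost_usd for r in runs) / successes


# Illustrative data only, not measurements from any real model.
runs = [
    Run(latency_ms=100, cost_usd=0.01, passed=True),
    Run(latency_ms=200, cost_usd=0.01, passed=True),
    Run(latency_ms=300, cost_usd=0.01, passed=False),
    Run(latency_ms=400, cost_usd=0.01, passed=True),
]
latencies = [r.latency_ms for r in runs]
print("p50:", percentile(latencies, 50))
print("p95:", percentile(latencies, 95))
print("cost per success:", round(cost_per_success(runs), 4))
```

Dividing by successes rather than by attempts is what separates this from a per-token price: a cheap model that fails often can end up costing more per useful result.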

If a model clears the bar, it graduates to a canary release behind a flag. Many don’t. The ones that do feel like cheating.
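A canary behind a flag can be as simple as deterministic bucketing on a user identifier. The sketch below is one common way to do it, not necessarily how this release was gated; `in_canary`, `pick_model`, the salt string, and the model names "stable" and "candidate" are hypothetical.

```python
import hashlib


def in_canary(user_id: str, rollout_percent: int, salt: str = "model-upgrade") -> bool:
    """Place a user in the canary deterministically, based on a hash of their id."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in the range 0..99
    return bucket < rollout_percent


def pick_model(user_id: str, rollout_percent: int) -> str:
    # The same user always gets the same answer for a given salt and percentage.
    return "candidate" if in_canary(user_id, rollout_percent) else "stable"


users = [f"user-{i}" for i in range(1000)]
share = sum(pick_model(u, 10) == "candidate" for u in users) / len(users)
print(f"share of users on the candidate model: {share:.1%}")
```

Hashing instead of random sampling keeps each user on one model for the whole canary, which makes complaints and regressions easier to trace. Raising `rollout_percent` only adds users; nobody already on the candidate gets moved back.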

The payoff: compounding improvements

What users experience is the sum of model behavior and the product decisions wrapped around it. A model upgrade can improve quality and latency at the same time. The surprise isn’t that models improve; it’s how much your product improves with them. My “one-line” swap brought better translations and a snappier experience. I didn’t change the promise of the product, only its likelihood of keeping that promise.

Ship the glide path, not just the feature

Traditional software ages like wood—you maintain it, sand the rough edges, and keep water out. AI software ages like a service—new models arrive, and your product inherits their capabilities if you’ve designed for swappability.

In the AI era, the best investment isn’t another widget; it’s the architecture choices that let you catch every tailwind. Build for the upgrade, and your smallest PRs will do your loudest storytelling.