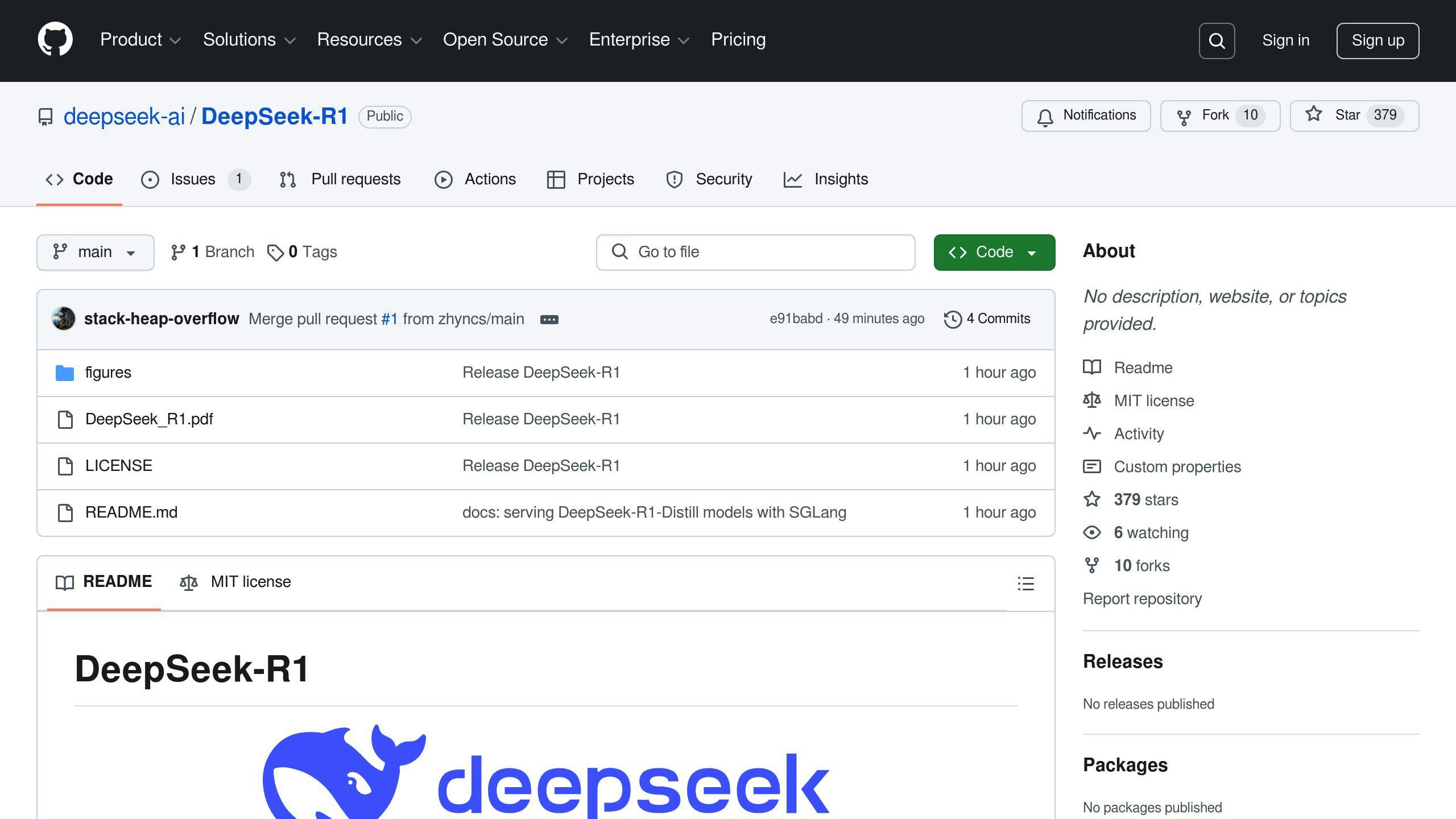

DeepSeek R1, launched in January 2025, is the first open-source AI model to match, and on several benchmarks outperform, OpenAI’s proprietary o1. Here’s what you need to know:

- Performance: DeepSeek R1 scores 52.5% on AIME problems and 91.6% in high school math, surpassing o1’s 44.6% and 85.5%, respectively.

- Transparency: It shows clear, traceable reasoning steps, whereas o1 exposes only summaries of its reasoning.

- Efficiency: DeepSeek R1 prioritizes efficient design over massive resource use, which makes it scalable and adaptable.

- Open-Source Advantage: Community-driven development enables faster innovation and collaboration, challenging the dominance of proprietary AI models.

Quick Comparison

| Criteria | DeepSeek R1 | OpenAI o1 |
|---|---|---|
| Performance | 52.5% (AIME), 91.6% (math) | 44.6% (AIME), 85.5% (math) |
| Reasoning | Transparent, step-by-step | Opaque (summaries only) |
| Development | Open-source, community-driven | Proprietary |
| Speed | Faster responses | Slower; delays of up to 1 minute |
| Scalability | Efficient across setups | Limited by proprietary design |

DeepSeek R1 demonstrates that open-source models can rival proprietary systems, offering flexibility, transparency, and competitive performance for complex tasks.
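
The gaps in the table are easier to compare when expressed both as absolute (percentage-point) and as relative differences. Here is a minimal Python sketch that uses only the scores quoted above:

```python
# Benchmark scores as reported in this article (percent).
scores = {
    "AIME": {"DeepSeek R1": 52.5, "OpenAI o1": 44.6},
    "High school math": {"DeepSeek R1": 91.6, "OpenAI o1": 85.5},
}

def point_gap(benchmark: str) -> float:
    """Absolute gap in percentage points (R1 minus o1), rounded to one decimal."""
    s = scores[benchmark]
    return round(s["DeepSeek R1"] - s["OpenAI o1"], 1)

def relative_gain(benchmark: str) -> float:
    """R1's improvement expressed as a percentage of o1's score, rounded to one decimal."""
    s = scores[benchmark]
    return round(100 * (s["DeepSeek R1"] - s["OpenAI o1"]) / s["OpenAI o1"], 1)

for bench in scores:
    print(f"{bench}: +{point_gap(bench)} points ({relative_gain(bench)}% relative)")
```

A 7.9-point lead on AIME amounts to roughly a 17.7% relative improvement over o1's score, so the two kinds of difference should not be used interchangeably.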


1. Key Features of DeepSeek R1

DeepSeek R1 is trained primarily through large-scale reinforcement learning instead of relying mainly on standard supervised fine-tuning, which lets its reasoning skills develop more naturally. This method is particularly effective for complex mathematical and logical problems.
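
The article does not describe the training loop itself. The DeepSeek-R1 paper describes Group Relative Policy Optimization (GRPO), in which several answers are sampled per prompt, each is scored with rule-based rewards (answer accuracy and output format), and each score is normalized against its own group. The sketch below covers only that advantage computation, and it makes simplifying assumptions: the reward weights are arbitrary, the answer check is a plain string match, and the policy-gradient update is omitted.

```python
import re
import statistics

def rule_based_reward(completion: str, reference: str) -> float:
    """Toy reward: 1.0 for a correct final answer plus 0.5 for a well-formed <think> block.
    The 1.0 / 0.5 weights are illustrative assumptions, not published values."""
    has_think_block = re.search(r"<think>.*?</think>", completion, re.DOTALL) is not None
    final_answer = completion.split("</think>")[-1].strip()  # text after the reasoning
    accuracy = 1.0 if final_answer == reference else 0.0
    return accuracy + (0.5 if has_think_block else 0.0)

def group_relative_advantages(rewards: list[float]) -> list[float]:
    """Score each sample against its own group: (reward - group mean) / group std.
    Uses the population std here; implementations differ on this detail."""
    mean = statistics.mean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0] * len(rewards)  # all samples scored the same: no learning signal
    return [(r - mean) / std for r in rewards]

# Four sampled completions for the same prompt, "What is 2 + 3?"
group = [
    "<think>2 + 3 = 5</think> 5",
    "<think>2 + 3 = 6</think> 6",
    "5",
    "<think>not sure</think> 4",
]
rewards = [rule_based_reward(c, "5") for c in group]
advantages = group_relative_advantages(rewards)
for completion, r, a in zip(group, rewards, advantages):
    print(f"reward={r:.1f} advantage={a:+.2f}  {completion!r}")
```

Because each sample is compared only with its siblings, correct and well-formatted answers receive positive advantages and weak ones receive negative advantages. No separately trained value model is needed to provide that baseline.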

Performance Metrics

DeepSeek R1 reaches 66.7% accuracy on AIME problems when it uses more than 100,000 tokens, compared with just 21% when it uses fewer than 1,000. In other words, the model benefits from extended reasoning chains: its accuracy rises as it is allowed to reason for longer [2].

| Token Usage | AIME Accuracy |
|---|---|
| < 1,000 tokens | 21% |
| > 100,000 tokens | 66.7% |
| Overall (reported average) | 52.5% |
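
A table like the one above can be produced by grouping evaluation runs according to how many reasoning tokens they consumed and then computing accuracy within each group. The following minimal Python sketch uses the table's bucket boundaries. The records are made up for illustration and are not DeepSeek's data.

```python
from collections import defaultdict

# Hypothetical evaluation records: (reasoning tokens used, answered correctly?).
# These values are invented for illustration only.
records = [
    (800, False), (950, True), (600, False), (700, False),
    (120_000, True), (150_000, True), (110_000, False),
]

def bucket(tokens: int) -> str:
    """Assign a run to a token-budget bucket matching the table's labels."""
    if tokens < 1_000:
        return "< 1,000 tokens"
    if tokens > 100_000:
        return "> 100,000 tokens"
    return "1,000-100,000 tokens"

def accuracy_by_bucket(rows):
    """Fraction of correct answers within each token-budget bucket."""
    hits, totals = defaultdict(int), defaultdict(int)
    for tokens, correct in rows:
        b = bucket(tokens)
        totals[b] += 1
        hits[b] += int(correct)
    return {b: hits[b] / totals[b] for b in totals}

for name, acc in accuracy_by_bucket(records).items():
    print(f"{name}: {acc:.1%}")
```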

Architecture and Processing

DeepSeek R1’s design is tailored for solving complex problems by enabling it to:

- Handle multiple aspects of a problem at once

- Produce detailed, step-by-step solutions

- Validate its conclusions through internal checks

Transparency and Efficiency

One of the standout features of DeepSeek R1 is its ability to provide clear, step-by-step reasoning. This transparency makes it easier for users to understand and trust its outputs [4]. The model also incorporates reflection and verification during problem-solving, making it particularly effective for intricate tasks [4][6].
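
The open R1 weights emit their reasoning before the final answer, delimited by `<think>` and `</think>` tags. As a result, the trace can be separated from the answer and inspected step by step. The minimal Python sketch below assumes that the raw completion text uses this tag format. Hosted APIs may return the reasoning in a separate field instead, and the sample output shown is hypothetical.

```python
import re

# Non-greedy match across newlines for the first <think>...</think> block.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(output: str) -> tuple[str, str]:
    """Return (reasoning, answer). If no <think> block is present, reasoning is empty."""
    match = THINK_RE.search(output)
    if match is None:
        return "", output.strip()
    reasoning = match.group(1).strip()
    answer = output[match.end():].strip()  # everything after </think>
    return reasoning, answer

raw = "<think>\nStep 1: 12 * 3 = 36.\nStep 2: 36 + 4 = 40.\n</think>\nThe answer is 40."
reasoning, answer = split_reasoning(raw)
for i, step in enumerate(reasoning.splitlines(), start=1):
    print(f"[{i}] {step}")
print("Answer:", answer)
```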

Scalability

DeepSeek R1 scales well: even smaller, more efficient versions of the model deliver strong performance [5]. This makes it suitable for deployment across a range of computing setups [2].
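
To make "a range of computing setups" more concrete, a common rule of thumb says that the model weights alone need roughly parameters × bytes per parameter of memory. The minimal Python sketch below applies that rule to a selection of the distilled R1 sizes DeepSeek released (1.5B to 70B, based on Qwen and Llama models) and to the full 671B-parameter mixture-of-experts model. It assumes standard bf16, int8, and int4 byte widths and ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
# Parameter counts in billions (a selection of published R1 sizes).
MODELS = {
    "R1-Distill 1.5B": 1.5,
    "R1-Distill 7B": 7,
    "R1-Distill 14B": 14,
    "R1-Distill 32B": 32,
    "R1-Distill 70B": 70,
    "R1 (full, MoE)": 671,  # all expert weights must be resident, even if few are active
}

BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Weights-only memory in GB (1e9 bytes); excludes KV cache and runtime overhead."""
    return params_billion * BYTES_PER_PARAM[precision]

for name, size in MODELS.items():
    row = ", ".join(f"{p}: {weight_memory_gb(size, p):.1f} GB" for p in BYTES_PER_PARAM)
    print(f"{name:>16} -> {row}")
```

By this estimate, the smallest distilled model fits comfortably on a laptop GPU, while the full model needs multi-GPU server hardware even at int4. That spread is what makes the family adaptable.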

Developed with input from the community, DeepSeek R1 showcases the potential of open-source models to compete with proprietary systems like OpenAI o1, which will be discussed in the next section.


2. Key Features of OpenAI o1

Performance Metrics

On AIME, OpenAI o1 reaches 86% accuracy in pro mode and 78% in standard mode. It also makes 34% fewer major errors than o1-preview, a notable improvement in reliability [3].

Architecture and Processing

The model is trained with reinforcement learning to work through a chain of thought before answering, which helps it tackle complex problems [3]. It also needs 60% fewer tokens than o1-preview to reach the same reasoning quality, which helps reduce latency [7].
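
If decoding speed stays roughly constant, the link between fewer reasoning tokens and lower latency is simple arithmetic. The sketch below uses hypothetical numbers. The 10,000-token budget and the 50 tokens/s throughput are assumptions, and only the 60% reduction comes from the text. It also ignores time-to-first-token and network overhead.

```python
def reasoning_latency_s(reasoning_tokens: int, tokens_per_second: float) -> float:
    """Time spent generating reasoning tokens, assuming constant decode throughput."""
    return reasoning_tokens / tokens_per_second

preview_tokens = 10_000                         # hypothetical o1-preview reasoning budget
o1_tokens = round(preview_tokens * (1 - 0.60))  # "60% fewer tokens" -> 4,000
throughput = 50.0                               # hypothetical tokens per second

print(reasoning_latency_s(preview_tokens, throughput))  # 200.0 (seconds)
print(reasoning_latency_s(o1_tokens, throughput))       # 80.0 (seconds)
```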

Safety and Transparency

OpenAI o1 improves transparency by showing summaries of its chain of thought, and it scores 0.92 on safety tests, which include robust defenses against jailbreak attempts [3]. It also reaches 94% accuracy on unambiguous questions, showing progress in reducing bias [3].

Scalability and Integration

By integrating with Azure OpenAI Service, o1 offers:

| Feature | Capability |
|---|---|
| Vision Processing | Analysis of uploaded images |
| Function Calling | Integration with external systems |
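
Function calling works by declaring tools that the model may ask to invoke. The minimal sketch below builds a Chat Completions request body with one tool declared, assuming the standard JSON-schema `tools` format. The `get_order_status` tool, its description, and its parameters are hypothetical. The Azure endpoint and deployment name, which Azure supplies separately, are omitted.

```python
import json

# Hypothetical tool declaration: name, description, and parameters are illustrative only.
get_order_status = {
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order by its ID.",
        "parameters": {  # JSON Schema describing the arguments the model should produce
            "type": "object",
            "properties": {
                "order_id": {"type": "string", "description": "The order identifier."}
            },
            "required": ["order_id"],
        },
    },
}

request_body = {
    "messages": [{"role": "user", "content": "Where is order A-1042?"}],
    "tools": [get_order_status],
}

print(json.dumps(request_body, indent=2))
```

With the official `openai` Python package, these fields would be passed to `client.chat.completions.create(model=<your deployment name>, messages=..., tools=...)` on an `AzureOpenAI` client. If the model decides to use the tool, its reply contains a `tool_calls` entry with JSON arguments for your code to execute. Function-calling support varies by o1 version, so check it for your deployment before relying on it.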

"The o1 model is specifically designed to ‘think’ before responding, meaning it doesn’t just generate text but goes through multiple steps of reasoning to solve complex problems before responding." – Louis Bouchard, AI Analyst [8]

While o1’s reasoning-focused approach can make its responses slower than those of earlier versions [3][8], this trade-off allows for more detailed and accurate problem-solving. Compared with DeepSeek R1, o1 shows a distinct mix of strengths and weaknesses.

Strengths and Weaknesses of Both Models

Analyzing DeepSeek R1 and OpenAI o1 reveals clear differences in their strengths and limitations. Their distinct architectures and capabilities make them suitable for different applications.

| Criteria | DeepSeek R1 | OpenAI o1 |
|---|---|---|
| Processing Speed | Faster execution times; avoids delays of up to one minute [9][11] | Slower responses, with delays reaching up to one minute [11] |
| Code Generation | Limited documentation on its capabilities | Performs well in creating functional applications but needs human oversight [10] |
| Problem-Solving | Struggles with tasks requiring complex logic [12] | Excels in mathematics and structured problems, reaching 86% accuracy on AIME in pro mode |
| Flexibility | Open-source design allows for quick adjustments [12] | Proprietary structure limits flexibility, though updates aim to add features like web browsing [3] |
| Reliability | Requires more thorough testing and validation [10] | Faces challenges with nuanced reasoning and edge cases [10] |

DeepSeek R1 stands out for its faster response times, which make it the better choice when speed is critical. OpenAI o1’s focus on reasoning, on the other hand, lets it excel at structured problem-solving, particularly complex math. However, o1 has its own reliability concerns:

"The o1 model’s reliance on training data raises concerns about its reliability in critical applications, particularly when handling edge cases or highly specific instructions" [10]

DeepSeek R1 benefits from its open-source nature, which allows it to quickly adapt to new challenges [12]. However, its relative lack of testing means it hasn’t yet achieved the level of refinement seen in OpenAI o1. Both models also face difficulties with spatial reasoning, pointing to a broader industry challenge in this area [1].

Ultimately, the choice between these models depends on the specific needs of a project. Organizations prioritizing accurate code generation and mathematical reasoning might lean toward OpenAI o1, despite its slower performance. Meanwhile, teams requiring faster responses and flexibility may prefer DeepSeek R1, even though it struggles with complex reasoning tasks.

These differences underline how each model is tailored to serve specific purposes, shaping their roles in the evolving AI landscape.

Final Thoughts on DeepSeek R1 and OpenAI o1

DeepSeek R1 performs exceptionally well on mathematical reasoning benchmarks, offering a compelling open-source alternative to proprietary models like OpenAI o1. Rather than focusing on scaling parameters or data, it emphasizes detailed reasoning, marking a shift toward efficiency and transparency in AI development [2].

Its open-source nature allows for quick innovation and customization, making it easier to adapt for specific industries. This approach challenges proprietary models like OpenAI o1 and changes the competitive landscape of AI by making advanced tools more accessible [1]. By embracing this open framework, DeepSeek R1 not only competes with established players but also influences the direction of AI development.

Both models have unique strengths. DeepSeek R1 shines in tasks requiring detailed reasoning and solving mathematical problems, while OpenAI o1 leads in grammar, coding, and some computational tasks [1][13]. Their competition drives progress in creating more efficient, transparent, and specialized AI tools.

This rivalry pushes advancements in architecture and domain-specific applications. By encouraging tailored solutions for various industries, these models contribute to a more diverse and accessible AI ecosystem [1][2].